Experiments Overview

What this view shows

Rockerbox Experiments let marketers measure and compare experiments run on their top platforms, all within Rockerbox. This, in turn, helps them understand the full contribution of their marketing and evaluate which tactics are driving the most effective results for their business.

Marketers run many different types of experiments in other platforms, such as creative and geo variance tests.

Create experiments in Rockerbox to start measuring lift from your test campaigns against an established baseline.

Requirements

Before you can start using Experiments, you must meet these requirements:

- Must be running campaigns in supported platforms (see below)

- Must have authorized Rockerbox to pull spend from the platforms

- Must include Rockerbox tracking parameters for each platform

Rockerbox supports creating Experiments from any ad platform we’ve integrated with via API. You must have active campaigns, ad groups, or ads that you are running or plan to run in these platforms. You also need to ensure that you’ve authenticated access to these platforms and that Rockerbox is actively ingesting spend.

Currently the supported platforms include:

- Bing

- Snap

- TikTok

- DV360

- AdRoll

- Criteo

- Outbrain

- Taboola

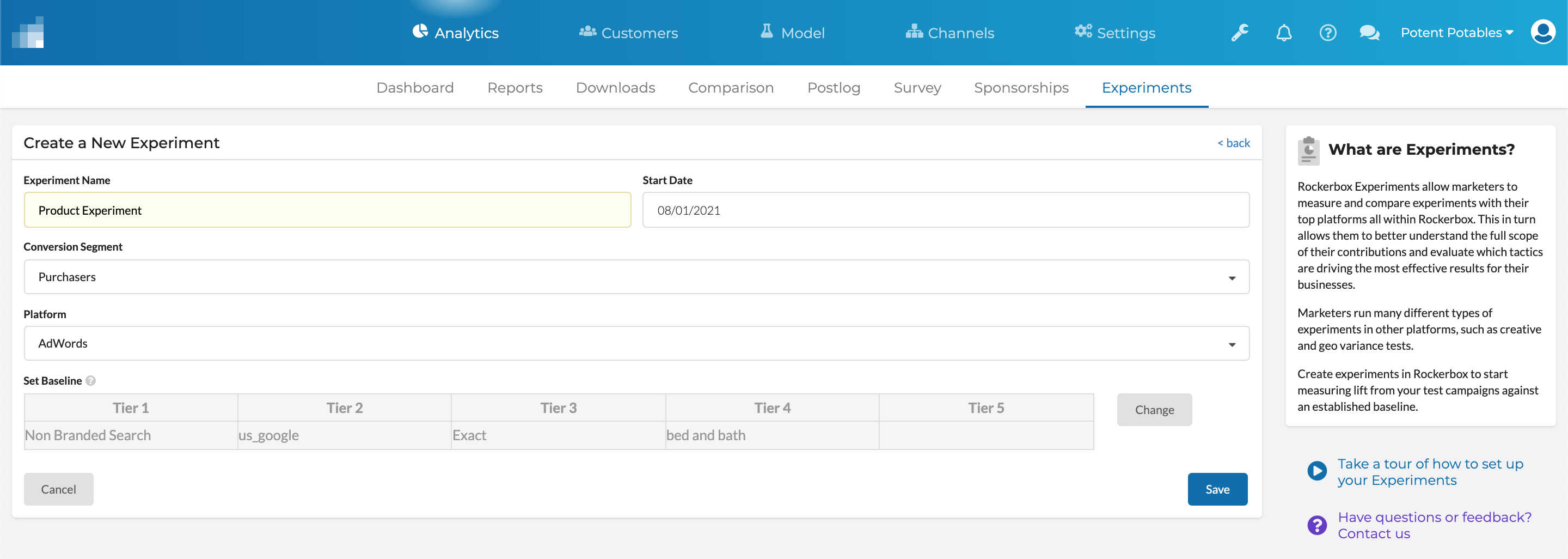

Setting Up Your Experiments

To create your first Experiment, you’ll need to identify the platform where the Experiment is running, as well as your Baseline campaign. The Baseline is what each Test in the Experiment will be compared against to measure lift.

| Field | Description |
| --- | --- |
| Experiment Name | Choose a name that will help you identify this Experiment. |
| Start Date | Select a Start Date for this Baseline. Choose a date early enough to collect sufficient data, but not so far back that the campaign’s parameters have changed significantly since then. (Note: Start Dates earlier than 8/1/2021 are not supported.) |
| Conversion Segment | Select the conversion you want to use. In most cases, this will be your Featured Segment. |
| Platform | Select the platform where you are running the Experiment. (Note: this is limited to the platforms listed in the Requirements section.) |
| Set Baseline | Select the Baseline for the Experiment. All Tests will be compared against this Baseline for Lift and Statistical Significance. We recommend a Baseline that has been running for a long time and that you know performs well. |

In this doc, we use the term Campaign for simplicity. However, depending on each platform’s capabilities, you can set Experiments at any Tier, including ad sets/ad groups and ads. A given Experiment can only compare objects at the same Tier. For example, you can create an Experiment that compares test campaigns in Tier 3 to other campaigns in Tier 3, but you cannot compare a Tier 4 ad set to a Tier 3 campaign.
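To make the setup rules concrete, here is a minimal sketch that models the setup fields and enforces three rules stated in this doc: the platform must be a supported one, the Start Date cannot be earlier than 8/1/2021, and every Test must be at the same Tier as the Baseline. This is not a Rockerbox API. The class names (`PlatformObject`, `Experiment`), the method `add_test`, and the example values (such as the "Purchase" segment) are all hypothetical, and Tier numbers follow the doc’s example (Tier 3 campaign, Tier 4 ad set).

```python
from dataclasses import dataclass, field
from datetime import date

# Platforms listed in the Requirements section.
SUPPORTED_PLATFORMS = {"Bing", "Snap", "TikTok", "DV360", "AdRoll", "Criteo", "Outbrain", "Taboola"}

# Start Dates earlier than 8/1/2021 are not supported.
EARLIEST_START_DATE = date(2021, 8, 1)


@dataclass
class PlatformObject:
    """Hypothetical model of a campaign, ad set/ad group, or ad in an ad platform."""
    name: str  # name as it appears in the ad platform
    tier: int  # e.g. 3 for a campaign, 4 for an ad set


@dataclass
class Experiment:
    """Hypothetical model of the Experiment setup fields; not a Rockerbox API."""
    experiment_name: str
    start_date: date
    conversion_segment: str
    platform: str
    baseline: PlatformObject
    tests: list[PlatformObject] = field(default_factory=list)

    def __post_init__(self) -> None:
        if self.platform not in SUPPORTED_PLATFORMS:
            raise ValueError(f"Unsupported platform: {self.platform}")
        if self.start_date < EARLIEST_START_DATE:
            raise ValueError("Start Date cannot be earlier than 2021-08-01")

    def add_test(self, test: PlatformObject) -> None:
        # A Test is only comparable to a Baseline at the same Tier.
        if test.tier != self.baseline.tier:
            raise ValueError(
                f"Test is at Tier {test.tier}, but the Baseline is at Tier {self.baseline.tier}"
            )
        self.tests.append(test)


if __name__ == "__main__":
    exp = Experiment(
        experiment_name="Q3 creative test",
        start_date=date(2021, 9, 1),
        conversion_segment="Purchase",
        platform="TikTok",
        baseline=PlatformObject("Prospecting Baseline", tier=3),
    )
    exp.add_test(PlatformObject("Creative Test A", tier=3))  # same Tier: accepted
    try:
        exp.add_test(PlatformObject("Creative Test A - Ad Set 1", tier=4))
    except ValueError as err:
        print(err)  # a Tier 4 ad set cannot be compared to a Tier 3 campaign
```

Each Test is stored separately in `tests`, which mirrors the fact that every Test is evaluated independently against the same Baseline.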

When you select your Baseline campaign, Rockerbox displays its name as it appears in the ad platform. If you use a standardized naming convention in the ad platform (for example, including “Baseline” in the campaign name), that campaign will be much easier to find in Rockerbox. The same holds true for test campaigns (for example, “geo test” or “creative test”).
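As an illustration of why consistent naming helps, the following sketch groups campaign names by the convention keyword they contain. It does not reflect how Rockerbox searches names; the `CONVENTIONS` keywords and the `classify_campaigns` helper are hypothetical, so substitute whatever labels your team standardizes on.

```python
# Hypothetical naming-convention keywords mapped to display labels.
CONVENTIONS = {
    "baseline": "Baseline",
    "geo test": "Geo Test",
    "creative test": "Creative Test",
}


def classify_campaigns(names: list[str]) -> dict[str, list[str]]:
    """Group campaign names by the first convention keyword they contain (case-insensitive)."""
    groups: dict[str, list[str]] = {label: [] for label in CONVENTIONS.values()}
    groups["Unlabeled"] = []
    for name in names:
        lowered = name.lower()
        for keyword, label in CONVENTIONS.items():
            if keyword in lowered:
                groups[label].append(name)
                break
        else:
            # No keyword matched: this campaign will be harder to spot by name.
            groups["Unlabeled"].append(name)
    return groups


if __name__ == "__main__":
    names = ["US Prospecting Baseline", "Q3 Geo Test - Midwest", "Creative Test: UGC video", "Retargeting"]
    for label, matched in classify_campaigns(names).items():
        print(label, matched)
```

Anything that lands in "Unlabeled" is a campaign you would have to recognize by memory when picking a Baseline or Test.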

Adding Your Test Campaigns

Once you’ve identified your Baseline campaign, you are ready to add Test campaigns. Test campaigns are those that you want to compare against the Baseline.

Unsure which Tests to set up? Check out this page for some examples.

Click the + Add Test button to add one or more Tests to your Experiment. Each Test will be evaluated independently.

Note: the Test “object” must fall under the same Tier as the Baseline; in other words, if your Baseline was set at Tier 3, then your Test must also be set at Tier 3. Find out more about Tiers.

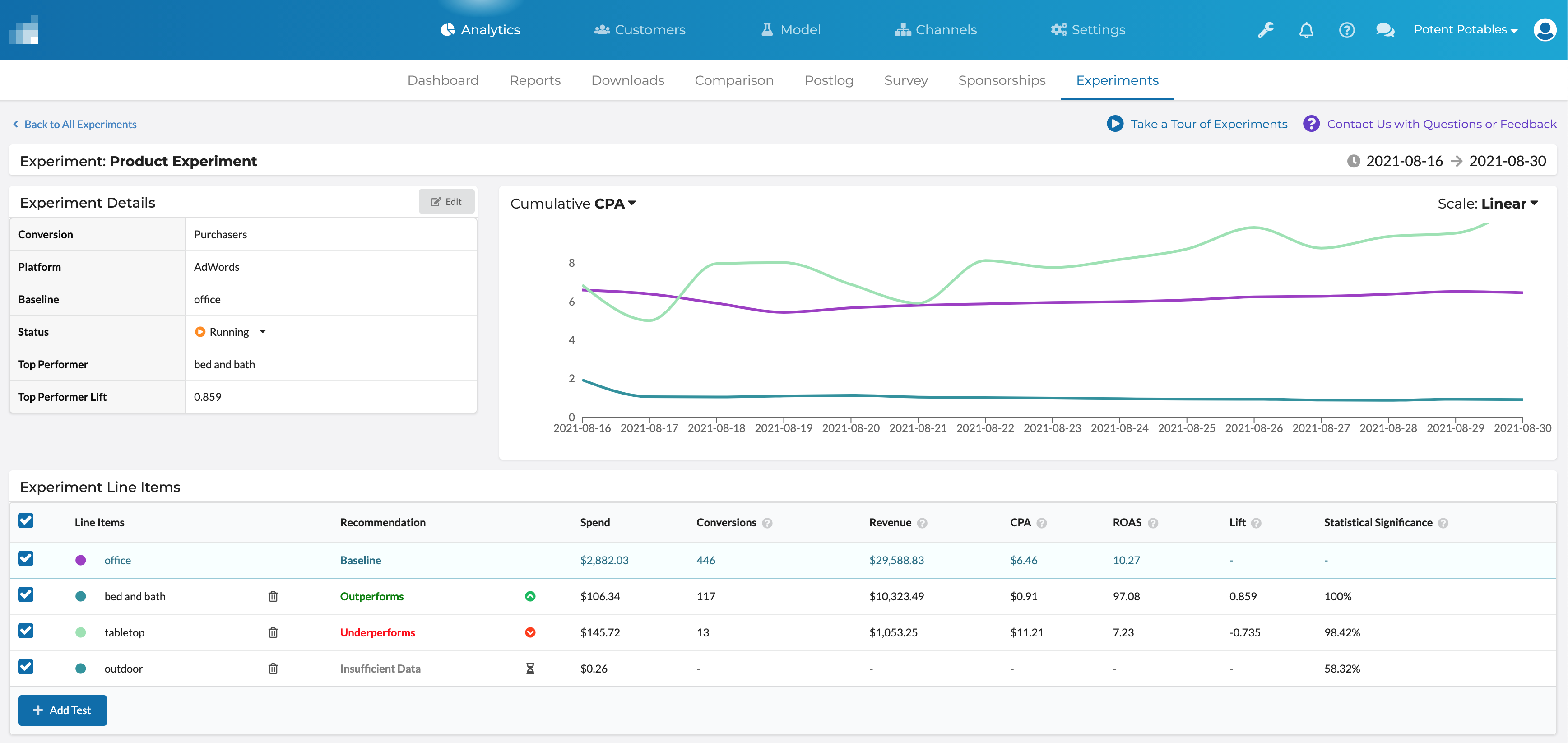

Review Your Results

Once you add one or more Tests, Rockerbox displays metrics for those Tests over the time period, including:

| Field | Description |
| --- | --- |
| Recommendation | Identifies the Baseline. For a Test, shows one of: Outperforms (the Test has a better, that is lower, CPA than the Baseline); Underperforms (the Test has a worse CPA than the Baseline); Insufficient Data (there is not yet enough data to determine performance). |
| Spend | The total spend. |
| Conversions | The number of conversions where the marketing touchpoint appears anywhere on the path to conversion, also known as “assisted conversions”. |
| Revenue | The total revenue from all conversions where the marketing touchpoint appears anywhere on the path to conversion, also known as “assisted revenue”. |
| CPA | Cost per acquisition: the total marketing spend divided by the number of assisted conversions. |
| ROAS | Return on ad spend: the total assisted revenue divided by spend. |
| Lift | The improvement of the Test over the Baseline. |
| Statistical Significance | The statistical significance of the proportions test. A Test shows 100% statistical significance when its result is significant at alpha = 0.05 (p-value at or below 0.05). |

Note: these definitions are also available in the tooltips.
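Rockerbox calculates these metrics for you; the sketch here only shows how the definitions fit together. Assisted conversions and revenue count any conversion whose path contains the touchpoint, CPA is spend over assisted conversions, and ROAS is assisted revenue over spend. Two parts are assumptions, not documented behavior: `lift` is taken to be the relative CPA improvement of the Test over the Baseline, and `recommendation` uses a hypothetical `min_conversions` cutoff for "Insufficient Data". All names (`Conversion`, `Metrics`, `assisted_totals`, `lift`, `recommendation`) are illustrative.

```python
from dataclasses import dataclass


@dataclass
class Conversion:
    """Hypothetical conversion record: ordered touchpoint names plus revenue."""
    path: list[str]
    revenue: float


def assisted_totals(touchpoint: str, conversions: list[Conversion]) -> tuple[int, float]:
    """Assisted conversions and revenue: `touchpoint` appears anywhere on the path."""
    hits = [c for c in conversions if touchpoint in c.path]
    return len(hits), sum(c.revenue for c in hits)


@dataclass
class Metrics:
    spend: float
    conversions: int  # assisted conversions
    revenue: float    # assisted revenue

    @property
    def cpa(self) -> float:
        # Spend divided by assisted conversions; infinite when there are none.
        return self.spend / self.conversions if self.conversions else float("inf")

    @property
    def roas(self) -> float:
        # Assisted revenue divided by spend; 0.0 when there is no spend.
        return self.revenue / self.spend if self.spend else 0.0


def lift(test: Metrics, baseline: Metrics) -> float:
    """ASSUMPTION: lift as the relative CPA improvement of the Test (positive is better)."""
    # Undefined (NaN) when either side has no conversions or the Baseline CPA is zero.
    if not (test.conversions and baseline.conversions) or baseline.cpa == 0:
        return float("nan")
    return (baseline.cpa - test.cpa) / baseline.cpa


def recommendation(test: Metrics, baseline: Metrics, min_conversions: int = 30) -> str:
    # min_conversions is a hypothetical cutoff, not a documented Rockerbox threshold.
    if min(test.conversions, baseline.conversions) < min_conversions:
        return "Insufficient Data"
    # A lower CPA is better; ties are counted as Underperforms (an assumption).
    return "Outperforms" if test.cpa < baseline.cpa else "Underperforms"
```

For example, a Baseline with $1,000 spend and 50 assisted conversions has a CPA of $20, and a Test with $800 spend and 50 assisted conversions has a CPA of $16. Under the assumptions above, this sketch reports a lift of 0.2 (20%) and a recommendation of Outperforms.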

Statistical Significance

Rockerbox uses a proportions test to measure statistical significance. A Test is shown as 100% statistically significant when it meets a significance level (alpha) of 0.05 or better, that is, when its p-value is at or below 0.05.

Find out more about this test from this external link.
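For readers who want to see the mechanics, here is a minimal sketch of a standard pooled two-proportion z-test with a two-sided p-value, checked against alpha = 0.05. It is not Rockerbox’s implementation. The doc does not say which counts form each proportion, so `x` (successes, such as conversions) and `n` (trials, such as clicks) are assumptions, as is the two-sided form; the function names are hypothetical.

```python
import math


def two_proportion_z_test(x1: int, n1: int, x2: int, n2: int) -> tuple[float, float]:
    """Pooled two-proportion z-test. Returns (z, two-sided p-value).

    x1/n1 is the Test proportion and x2/n2 the Baseline proportion, e.g.
    conversions over a hypothetical exposure count such as clicks.
    """
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    if se == 0:
        # Both proportions are 0 or both are 1: no evidence of a difference.
        return 0.0, 1.0
    z = (p1 - p2) / se
    # For a standard normal Z, P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


def is_significant(x_test: int, n_test: int, x_base: int, n_base: int, alpha: float = 0.05) -> bool:
    """True when the difference is significant at `alpha` (p-value <= alpha)."""
    _, p_value = two_proportion_z_test(x_test, n_test, x_base, n_base)
    return p_value <= alpha


if __name__ == "__main__":
    z, p = two_proportion_z_test(150, 1000, 100, 1000)
    print(f"z = {z:.3f}, p = {p:.5f}, significant: {is_significant(150, 1000, 100, 1000)}")
```

How Rockerbox maps a p-value onto the percentage shown in the Statistical Significance column is not described here, so this sketch stops at the p-value and a pass/fail decision at alpha = 0.05.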